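Module 1: Private Cloud (30 points)

The enterprise first builds and operates a private cloud platform that provides infrastructure cloud services such as cloud hosts, cloud networks, and cloud storage, and develops automated operations programs for it.

Task 1: Private Cloud Service Setup (5 points)

1.1.1 Basic environment configuration

1. Set the controller node's hostname to controller and the compute node's hostname to compute.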

    # Controller node
    [root@localhost ~]# hostnamectl set-hostname controller
    [root@localhost ~]# bash
    [root@controller ~]#
    # Compute node
    [root@localhost ~]# hostnamectl set-hostname compute
    [root@localhost ~]# bash
    [root@compute ~]#

2. Map the IP addresses to hostnames in the hosts file.

    # All nodes
    [root@controller ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.129.1.10 controller
    172.129.1.11 compute
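The hosts mapping can also be applied by a script so that it is safe to run more than once. The following is a minimal sketch, not part of the original answer: the helper name add_host_entry is hypothetical, and the demo writes to a scratch file instead of the real /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME: append "IP NAME" unless NAME is already mapped.
# (Hypothetical helper, introduced only for this illustration.)
add_host_entry() {
    file=$1; ip=$2; name=$3
    # Match NAME as a whole whitespace-delimited field on any line.
    if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file rather than the real /etc/hosts.
hosts_file=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts_file"
add_host_entry "$hosts_file" 172.129.1.10 controller
add_host_entry "$hosts_file" 172.129.1.11 compute
add_host_entry "$hosts_file" 172.129.1.11 compute   # repeated call adds nothing
cat "$hosts_file"
```

On a real node the first argument would be /etc/hosts, and the same two calls would be run on both controller and compute.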

1.1.2 yum repository configuration

Using the provided HTTP service address, create the yum repository file http.repo on both the controller node and the compute node.

    [root@controller ~]# cat /etc/yum.repos.d/local.repo
    [centos]
    name=centos
    baseurl=file:///opt/centos
    gpgcheck=0
    enabled=1

    [iaas]
    name=openstack
    baseurl=file:///opt/openstack/iaas-repo
    gpgcheck=0
    enabled=1

    [root@compute yum.repos.d]# cat /etc/yum.repos.d/ftp.repo
    [centos]
    name=centos
    baseurl=ftp://controller/centos
    gpgcheck=0
    enabled=1

    [iaas]
    name=openstack
    baseurl=ftp://controller/openstack/iaas-repo
    gpgcheck=0
    enabled=1
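The task statement asks for an http.repo file served over HTTP, while the listing above uses a local directory and FTP. A sketch of the HTTP variant might look like the following. The helper name write_http_repo, the placeholder address http://192.0.2.10, and the /centos and /openstack/iaas-repo paths (copied from the FTP layout) are all assumptions; the real server address and layout come from the competition environment.

```shell
#!/bin/sh
# write_http_repo FILE BASE_URL: write a two-section repo file.
# (Hypothetical helper; the paths under BASE_URL mirror the FTP example.)
write_http_repo() {
    cat > "$1" <<EOF
[centos]
name=centos
baseurl=$2/centos
gpgcheck=0
enabled=1

[iaas]
name=openstack
baseurl=$2/openstack/iaas-repo
gpgcheck=0
enabled=1
EOF
}

repo_file=$(mktemp)
# 192.0.2.10 is a documentation-only placeholder, not the real HTTP server.
write_http_repo "$repo_file" "http://192.0.2.10"
cat "$repo_file"
```

On a real node the target would be /etc/yum.repos.d/http.repo, followed by yum clean all and yum repolist to confirm that both repositories resolve.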

1.1.3 Configure passwordless SSH

Configure the controller node so that it can log in to the compute node over SSH without a password.

    [root@controller ~]# ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Created directory '/root/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:gyXwgY44hJYX3AgT2fexXMrZm2h5PSO7gjKvLkqaUdQ root@controller
    The key's randomart image is:
    +---[RSA 2048]----+
    |.+B.=.. |
    |.=.*.= o . |
    |o.ooE * O |
    |o.. . @ . |
    | .. . S + |
    | . + * + |
    |.. o . o o |
    |o+ o . . . |
    |= oo=. ... |
    +----[SHA256]-----+
    [root@controller ~]# ssh-copy-id root@compute
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    The authenticity of host 'compute (172.129.1.11)' can't be established.
    ECDSA key fingerprint is SHA256:WgjZdcpq8bqsV/iKGbAQHGzRcdjUspMRWZBqg3iXV28.
    ECDSA key fingerprint is MD5:fc:3a:85:49:87:63:d6:7f:f4:19:b5:cd:ce:f7:f2:88.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@compute's password:

    Number of key(s) added: 1

    Now try logging into the machine, with: "ssh 'root@compute'"
    and check to make sure that only the key(s) you wanted were added.

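1.1.4 Basic installation

Install the openstack-iaas package on both the controller node and the compute node.

    [root@controller ~]# yum install -y openstack-iaas
    [root@compute ~]# yum install -y openstack-iaas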

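1.1.5 Database installation and tuning

On the controller node, install MariaDB, RabbitMQ, and the related services, then carry out the related operations.

    [root@controller ~]# iaas-install-mysql.sh

Extension

Database tuning:

On the controller node, use the iaas-install-mysql.sh script to install MariaDB, Memcached, and RabbitMQ.

1. Enable the database's upper/lower-case support;

3. Set the database log buffer to 64MB;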

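    [root@controller ~]# vim /etc/my.cnf
    [mysqld]
    lower_case_table_names = 1       # table names are stored in lowercase and compared case-insensitively
    innodb_buffer_pool_size = 4G     # database buffer pool (cache)
    innodb_log_buffer_size = 64M     # log buffer, i.e. the redo log buffer (size suffix is M, not MB)
    innodb_log_file_size = 256M      # size of each redo log file
    innodb_log_files_in_group = 2    # number of redo log files in the group
    [root@controller ~]# systemctl restart mariadb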

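1.1.6 Keystone installation and use

Install the Keystone service on the controller node and create users.

    [root@controller ~]# iaas-install-keystone.sh

1.1.7 Glance installation and use

Install the Glance service on the controller node. Upload an image to the platform and set the parameters the image requires at boot.

    [root@controller ~]# iaas-install-glance.sh

1.1.8 Nova installation

Install the Nova service on both the controller node and the compute node. After installation, complete the Nova-related configuration.

    [root@controller ~]# iaas-install-placement.sh
    [root@controller ~]# iaas-install-nova-controller.sh
    [root@compute ~]# iaas-install-nova-compute.sh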

Extension

After installation, modify the Nova configuration file to fix the failure in which a virtual machine times out while starting, because of an overly long wait, and therefore cannot obtain an IP address.

    [root@controller ~]# iaas-install-placement.sh
    [root@controller ~]# iaas-install-nova-controller.sh
    [root@controller ~]# vim /etc/nova/nova.conf
    # vif_plugging_is_fatal=true
    # change to
    vif_plugging_is_fatal=false
    # Restart the nova-* services
    [root@controller ~]# systemctl restart openstack-nova*
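The manual edit above can be scripted. The sketch below is one possible approach, not the author's method: set_conf_option is a hypothetical helper that rewrites a key=value line whether or not it is commented out, and the demo operates on a scratch file with made-up contents rather than the real /etc/nova/nova.conf.

```shell
#!/bin/sh
# set_conf_option FILE KEY VALUE: rewrite "KEY=..." (commented out or not)
# to "KEY=VALUE". Hypothetical helper for illustration only.
set_conf_option() {
    tmp=$(mktemp)
    sed -E "s|^#?[[:space:]]*$2[[:space:]]*=.*|$2=$3|" "$1" > "$tmp" && mv "$tmp" "$1"
}

# Scratch file standing in for nova.conf; its contents are illustrative.
conf=$(mktemp)
printf '[DEFAULT]\n#vif_plugging_is_fatal=true\nvif_plugging_timeout=300\n' > "$conf"
set_conf_option "$conf" vif_plugging_is_fatal false
cat "$conf"
```

Because the helper replaces the file through a temporary copy, ownership and permissions should be checked afterwards if it is ever pointed at a real configuration file.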

1.1.9 Neutron installation

Install the Neutron service correctly on the controller and compute nodes.

    [root@controller ~]# iaas-install-neutron-controller.sh
    [root@compute ~]# iaas-install-neutron-compute.sh

1.1.10 Dashboard installation

Install the Dashboard service on the controller node. After installation, change the Dashboard's Django session data so that it is stored in files.

    [root@controller ~]# iaas-install-dashboard.sh
    [root@controller ~]# vim /etc/openstack-dashboard/local_settings
    SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
    # change to
    SESSION_ENGINE = 'django.contrib.sessions.backends.file'
    # Restart httpd so the change takes effect
    [root@controller ~]# systemctl restart httpd

1.1.11 Swift installation

Install the Swift service on both the controller node and the compute node. After installation, store the cirros image in segments.

    [root@controller ~]# iaas-install-swift-controller.sh
    [root@compute ~]# iaas-install-swift-compute.sh

Extension:

On the controller node and the compute node, install the Swift service with the iaas-install-swift-controller.sh and iaas-install-swift-compute.sh scripts respectively. After installation, use a command to create a container named examcontainer, upload the cirros-0.3.4-x86_64-disk.img image to the examcontainer container, and store it in segments of 10M each.

    [root@controller ~]# ls
    anaconda-ks.cfg cirros-0.3.4-x86_64-disk.img logininfo.txt
    [root@controller ~]# swift post examcontainer
    [root@controller ~]# swift upload examcontainer -S 10485760 cirros-0.3.4-x86_64-disk.img
    cirros-0.3.4-x86_64-disk.img segment 1
    cirros-0.3.4-x86_64-disk.img segment 0
    cirros-0.3.4-x86_64-disk.img
    [root@controller ~]# du -sh cirros-0.3.4-x86_64-disk.img
    13M cirros-0.3.4-x86_64-disk.img
    [root@controller ~]#
    # The image is only 13M, so it is stored as two segments
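The two-segment result follows from a ceiling division of the file size by the segment size passed to -S. A small sketch of that arithmetic is shown below; the helper name segment_count is hypothetical, and the byte size used for the image is an assumed value for a file that du reports as about 13M.

```shell
#!/bin/sh
# segment_count SIZE_BYTES SEGMENT_BYTES: number of segments (ceiling division).
segment_count() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

segment_size=10485760     # 10 * 1024 * 1024, the value passed to -S
image_size=13287936       # assumed byte size of an image of roughly 13M
segment_count "$image_size" "$segment_size"
```

A file of exactly 10485760 bytes would fit in one segment, and a single extra byte would push it to two.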

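1.1.12 Create a disk with Cinder

Install the Cinder service on both the controller node and the compute node, then expand the block storage on the compute node.

    [root@controller ~]# iaas-install-cinder-controller.sh
    [root@compute ~]# iaas-install-cinder-compute.sh

Extension

On the compute node, expand the block storage: carve out another 5G partition on the compute node and add it to the Cinder block storage backend.

    # Create the physical volume
    [root@compute ~]# pvcreate /dev/vdb4
    # Extend the cinder-volumes volume group
    [root@compute ~]# vgextend cinder-volumes /dev/vdb4
    # Verify
    [root@compute ~]# vgdisplay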

1.1.13 Manila installation and use

Install the Manila service on both the controller node and the compute node.

    [root@controller ~]# iaas-install-manila-controller.sh
    [root@compute ~]# iaas-install-manila-compute.sh

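Task 2: Private Cloud Service Operations (15 points)

1.2.1 Open up image permissions in OpenStack

The admin project contains an image named glance-cirros. Share the glance-cirros image with the demo project.

    [root@controller ~]# openstack image set glance-cirros --shared
    [root@controller ~]# openstack image add project glance-cirros demo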

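1.2.2 SkyWalking application deployment

Request one cloud host and use the provided packages to install the Elasticsearch and SkyWalking services on it. Request a second cloud host to run the gpmall shop application, and configure SkyWalking to monitor the gpmall host.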

    # Upload the required packages
    # Elasticsearch deployment
    [root@controller ~]# rpm -ivh elasticsearch-7.0.0-x86_64.rpm
    [root@controller ~]# vi /etc/elasticsearch/elasticsearch.yml
    # Settings that need to be configured:
    cluster.name: yutao                        # must match the elasticsearch setting in SkyWalking
    node.name: node-1
    network.host: 0.0.0.0
    http.port: 9200                            # the default; uncommenting it is optional
    discovery.seed_hosts: ["127.0.0.1"]        # required, otherwise the master node cannot be found
    cluster.initial_master_nodes: ["node-1"]   # required, otherwise the master node cannot be found
    [root@controller ~]# systemctl enable --now elasticsearch

    # SkyWalking deployment (storage section of config/application.yml)
    storage:
      selector: elasticsearch7
      elasticsearch:
        nameSpace: ${SW_NAMESPACE:"yutao"}
        clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
        protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
        trustStorePath: ${SW_SW_STORAGE_ES_SSL_JKS_PATH:"../es_keystore.jks"}
        trustStorePass: ${SW_SW_STORAGE_ES_SSL_JKS_PASS:""}
        user: ${SW_ES_USER:""}
        password: ${SW_ES_PASSWORD:""}
        secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.
        enablePackedDownsampling: ${SW_STORAGE_ENABLE_PACKED_DOWNSAMPLING:true} # Hour and Day metrics will be merged into minute index.
        dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.
        indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:2}
        indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:0}
        # Those data TTL settings will override the same settings in core module.
        recordDataTTL: ${SW_STORAGE_ES_RECORD_DATA_TTL:7} # Unit is day
        otherMetricsDataTTL: ${SW_STORAGE_ES_OTHER_METRIC_DATA_TTL:45} # Unit is day
        monthMetricsDataTTL: ${SW_STORAGE_ES_MONTH_METRIC_DATA_TTL:18} # Unit is month
        # Batch process setting, refer to https://www.elastic.co/guide/en/elasticsearch/client/java-api/5.5/java-docs-bulk-processor.html
        bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:1000} # Execute the bulk every 1000 requests
        flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:10} # flush the bulk every 10 seconds whatever the number of requests
        concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
        resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
        metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
        segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
        profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
        advanced: ${SW_STORAGE_ES_ADVANCED:""}
      elasticsearch7:
        nameSpace: ${SW_NAMESPACE:"yutao"}
        clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
        protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
        trustStorePath: ${SW_SW_STORAGE_ES_SSL_JKS_PATH:"../es_keystore.jks"}
        trustStorePass: ${SW_SW_STORAGE_ES_SSL_JKS_PASS:""}
        enablePackedDownsampling: ${SW_STORAGE_ENABLE_PACKED_DOWNSAMPLING:true} # Hour and Day metrics will be merged into minute index.
        dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.
        user: ${SW_ES_USER:""}
        password: ${SW_ES_PASSWORD:""}
        secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.
        indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:2}
        indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:0}
        # Those data TTL settings will override the same settings in core module.
        recordDataTTL: ${SW_STORAGE_ES_RECORD_DATA_TTL:7} # Unit is day
        otherMetricsDataTTL: ${SW_STORAGE_ES_OTHER_METRIC_DATA_TTL:45} # Unit is day
        monthMetricsDataTTL: ${SW_STORAGE_ES_MONTH_METRIC_DATA_TTL:18} # Unit is month
        # Batch process setting, refer to https://www.elastic.co/guide/en/elasticsearch/client/java-api/5.5/java-docs-bulk-processor.html
        bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:1000} # Execute the bulk every 1000 requests
        flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:10} # flush the bulk every 10 seconds whatever the number of requests
        concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
        resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
        metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
        segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
        profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
        advanced: ${SW_STORAGE_ES_ADVANCED:""}
    #  h2:
    #    driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}
    #    url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db}
    #    user: ${SW_STORAGE_H2_USER:sa}
    #    metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}

    # Elasticsearch 7 is installed, so the selector here is elasticsearch7
    storage:
      selector: elasticsearch7
      elasticsearch7:
        nameSpace: ${SW_NAMESPACE:"yutao"}   # keep this consistent with cluster.name in the Elasticsearch configuration above

    [root@controller ~]# bin/oapService.sh
    SkyWalking OAP started successfully!
    [root@controller ~]# bin/webappService.sh
    SkyWalking Web Application started successfully!

    [root@controller apache-skywalking-apm-bin-es7]# vi webapp/webapp.yml

1.2.3 OpenStack image compression

The HTTP file server holds an image named CentOS7.5-compress.qcow2. Compress this image.

    [root@controller ~]# du -sh CentOS7.5-compress.qcow2
    892M CentOS7.5-compress.qcow2
    [root@controller ~]# qemu-img convert -c -O qcow2 CentOS7.5-compress.qcow2 CentOS7.5-compress2.qcow2
    # -c                          compress
    # -O qcow2                    output format is qcow2
    # CentOS7.5-compress.qcow2    the file to compress
    # CentOS7.5-compress2.qcow2   the file produced by the compression
    [root@controller ~]# du -sh CentOS7.5-compress2.qcow2
    405M CentOS7.5-compress2.qcow2

1.2.4 Connect Glance to Cinder storage

On the OpenStack platform you built, modify the relevant parameters so that Glance can use Cinder as its backend store.
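No answer is given for this task. As a rough sketch only, the settings commonly involved are the store list in glance-api.conf and the image-upload options in cinder.conf. The option names below are taken from the upstream Glance and Cinder configuration references as best recalled, and the function names are hypothetical; verify both against the release actually installed before applying anything.

```shell
#!/bin/sh
# Print the glance-api.conf settings this sketch assumes
# (legacy single-store options: stores / default_store).
glance_cinder_fragment() {
    cat <<'EOF'
[glance_store]
stores = file,http,cinder
default_store = cinder
EOF
}

# Print the matching cinder.conf settings (also an assumption).
cinder_glance_fragment() {
    cat <<'EOF'
[DEFAULT]
allowed_direct_url_schemes = cinder
image_upload_use_cinder_backend = True
EOF
}

glance_cinder_fragment
cinder_glance_fragment
```

After merging such fragments into /etc/glance/glance-api.conf and /etc/cinder/cinder.conf, the Glance API and Cinder volume services would need a restart for the change to take effect.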

1.2.5 Create a container with a Heat template

On the OpenStack private cloud platform you built, write a Heat template file under /root so that running the YAML file creates a container named heat-swift.

    [root@controller ~]# cat create_swift.yaml
    heat_template_version: 2018-08-31
    resources:
      user:
        type: OS::Swift::Container
        properties:
          name: heat-swift

    # Run the YAML file
    [root@controller ~]# openstack stack create heat-swift -t create_swift.yaml

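1.2.6 Clear the Nova cache

On the OpenStack platform, modify the relevant configuration so that cached images that have not been used for a long time are deleted automatically after a set period.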
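No answer is given for this task either. A hedged sketch of the nova.conf options usually associated with image cache cleanup follows. The option names and the [DEFAULT] placement reflect older Nova releases as best recalled (newer releases move them into an [image_cache] section), the function name is hypothetical, and the one-day age and 2400-second interval are illustrative values, not requirements from the task.

```shell
#!/bin/sh
# nova_image_cache_fragment MIN_AGE_SECONDS INTERVAL_SECONDS
# Prints an assumed nova.conf fragment; check names against your release.
nova_image_cache_fragment() {
    cat <<EOF
[DEFAULT]
remove_unused_base_images = True
remove_unused_original_minimum_age_seconds = $1
image_cache_manager_interval = $2
EOF
}

one_day=$(( 24 * 60 * 60 ))
nova_image_cache_fragment "$one_day" 2400
```

The fragment would go into /etc/nova/nova.conf on the compute node, followed by a restart of the nova-compute service.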

1.2.7 Redis cluster deployment

Deploy a Redis cluster with one master, two replicas, and three sentinels.

    # Configure Redis
    # This lab installs Redis from the EPEL repository
    [root@localhost ~]# yum install -y redis
    [root@localhost ~]# vi /etc/redis.conf
    # 1. In the network section, comment out the line that restricts connections to the local machine: bind 127.0.0.1
    # 2. Enable running in the background: change daemonize no to yes
    # 3. Turn off protected mode: change protected-mode yes to protected-mode no
    # 4. Set the password: requirepass 123456
    # 5. Set the password used to connect to the master: masterauth 123456

    # Connect to the master (not needed on the master node itself)
    [root@localhost ~]# redis-cli -p 6379 -a 123456
    > slaveof <master-ip> 6379
    > info replication

    # Check the status on the master node
    [root@localhost ~]# redis-cli -p 6379 -a 123456
    > info replication

    # Configure Sentinel
    [root@localhost ~]# vi /etc/redis-sentinel.conf
    protected-mode no
    daemonize yes
    sentinel monitor mymaster <master-ip> 6379 2
    sentinel auth-pass mymaster 123456    # needed because requirepass is set on the master

    [root@localhost ~]# redis-sentinel /etc/redis-sentinel.conf

    # Verify
    [root@localhost ~]# redis-cli -p 26379
    127.0.0.1:26379> info sentinel
    # Sentinel
    sentinel_masters:1
    sentinel_tilt:0
    sentinel_running_scripts:0
    sentinel_scripts_queue_length:0
    sentinel_simulate_failure_flags:0
    master0:name=mymaster,status=sdown,address=172.129.1.50:6379,slaves=2,sentinels=3
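Since the same Sentinel settings are needed on all three nodes, the file can be generated rather than edited by hand. The sketch below is illustrative: write_sentinel_conf and majority are hypothetical helpers, the master address is the one that appears in the verification output above, and the password is the one set with requirepass. Using a majority of the sentinels as the quorum is a common choice, not something the task mandates.

```shell
#!/bin/sh
# write_sentinel_conf FILE MASTER_IP QUORUM PASSWORD
# Hypothetical helper that writes a minimal Sentinel configuration.
write_sentinel_conf() {
    cat > "$1" <<EOF
port 26379
protected-mode no
daemonize yes
sentinel monitor mymaster $2 6379 $3
sentinel auth-pass mymaster $4
EOF
}

# majority N: smallest count that is more than half of N sentinels.
majority() {
    echo $(( $1 / 2 + 1 ))
}

conf=$(mktemp)
write_sentinel_conf "$conf" 172.129.1.50 "$(majority 3)" 123456
cat "$conf"
```

With three sentinels the computed quorum is 2, which matches the value used in the sentinel monitor line of the manual configuration.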

1.2.8 Redis AOF tuning

Modify the relevant Redis configuration so that the AOF file does not grow too large, given that Redis performs AOF rewrites.

    [root@master ~]# vim /etc/redis.conf
    no-appendfsync-on-rewrite no
    aof-rewrite-incremental-fsync yes
    # Change the two parameters to
    aof-rewrite-incremental-fsync no
    no-appendfsync-on-rewrite yes
    # When no-appendfsync-on-rewrite is yes, fsync on the AOF is suspended while an AOF rewrite is running
    [root@master ~]# systemctl restart redis
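How large the AOF is allowed to grow before Redis rewrites it automatically is governed by two further redis.conf settings, auto-aof-rewrite-percentage and auto-aof-rewrite-min-size, which the answer above does not touch. The sketch below models that trigger rule as understood from the Redis documentation; should_rewrite is a hypothetical helper, and the sizes are example values.

```shell
#!/bin/sh
# should_rewrite BASE CURRENT PERCENT MIN_SIZE (sizes in bytes)
# Returns 0 when an automatic AOF rewrite would be triggered:
# the file exceeds MIN_SIZE and has grown by at least PERCENT
# relative to its size after the previous rewrite (BASE).
should_rewrite() {
    base=$1; current=$2; percent=$3; min_size=$4
    [ "$percent" -gt 0 ] || return 1            # 0 disables automatic rewrites
    [ "$current" -gt "$min_size" ] || return 1
    [ "$base" -gt 0 ] || base=1                 # avoid dividing by zero
    growth=$(( current * 100 / base - 100 ))
    [ "$growth" -ge "$percent" ]
}

mb=$(( 1024 * 1024 ))
# Example: base 64 MB, current 130 MB, percentage 100, min size 64 MB.
if should_rewrite $(( 64 * mb )) $(( 130 * mb )) 100 $(( 64 * mb )); then
    echo "rewrite"
else
    echo "no rewrite"
fi
```

Lowering the percentage or the minimum size makes rewrites happen sooner, which keeps the file smaller at the cost of more frequent rewrite work.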

1.2.9 JumpServer bastion host deployment

Use the provided packages to install the JumpServer bastion host service, and configure it to manage the controller and compute nodes you installed.

    [root@jumpserver ~]#
    anaconda-ks.cfg  jumpserver.tar.gz  original-ks.cfg

Extract the jumpserver.tar.gz package into the /opt directory.

    [root@jumpserver ~]#
    [root@jumpserver ~]#
    compose  config  docker  docker.service  images  jumpserver-repo  static.env

Move the default yum repository files to another directory and create a local yum repository file.

    [root@jumpserver ~]#
    [root@jumpserver ~]#
    [jumpserver]
    name=jumpserver
    baseurl=file:///opt/jumpserver-repo
    gpgcheck=0
    enabled=1
    EOF
    [root@jumpserver ~]#
    repo id        repo name        status
    jumpserver     jumpserver       2

Install the Python database.

Install and configure the Docker environment.

    [root@jumpserver opt]#
    [root@jumpserver opt]#
    [root@jumpserver opt]#
    [root@jumpserver opt]#
    [root@jumpserver opt]#
    [root@jumpserver opt]#
    Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /etc/systemd/system/docker.service.

Verify the service status.

    [root@jumpserver opt]#
    Docker version 18.06.3-ce, build d7080c1
    [root@jumpserver opt]#
    docker-compose version 1.27.4, build 40524192
    [root@jumpserver opt]#

Install the JumpServer service.

    [root@jumpserver images]#
    jumpserver_core_v2.11.4.tar  jumpserver_lion_v2.11.4.tar  jumpserver_nginx_alpine2.tar
    jumpserver_koko_v2.11.4.tar  jumpserver_luna_v2.11.4.tar  jumpserver_redis_6-alpine.tar
    jumpserver_lina_v2.11.4.tar  jumpserver_mysql_5.tar       load.sh
    [root@jumpserver images]#
    docker load -i jumpserver_core_v2.11.4.tar
    docker load -i jumpserver_koko_v2.11.4.tar
    docker load -i jumpserver_lina_v2.11.4.tar
    docker load -i jumpserver_lion_v2.11.4.tar
    docker load -i jumpserver_luna_v2.11.4.tar
    docker load -i jumpserver_mysql_5.tar
    docker load -i jumpserver_nginx_alpine2.tar
    docker load -i jumpserver_redis_6-alpine.tar
    [root@jumpserver images]#

Create the directories for the JumpServer service components.

    [root@jumpserver images]#
    [root@jumpserver images]#

Load the environment variables in static.env, then start the JumpServer service with the provided up.sh script.

    [root@jumpserver compose]#
    config_static              docker-compose-lb.yml              docker-compose-network.yml         down.sh
    docker-compose-app.yml     docker-compose-mysql-internal.yml  docker-compose-redis-internal.yml  up.sh
    docker-compose-es.yml      docker-compose-mysql.yml           docker-compose-redis.yml
    docker-compose-external.yml  docker-compose-network_ipv6.yml  docker-compose-task.yml
    [root@jumpserver compose]#
    [root@jumpserver compose]#
    Creating network "jms_net" with driver "bridge"
    Creating jms_redis ... done
    Creating jms_mysql ... done
    Creating jms_core ... done
    Creating jms_lina ... done
    Creating jms_nginx ... done
    Creating jms_celery ... done
    Creating jms_lion ... done
    Creating jms_luna ... done
    Creating jms_koko ... done
    [root@jumpserver compose]#

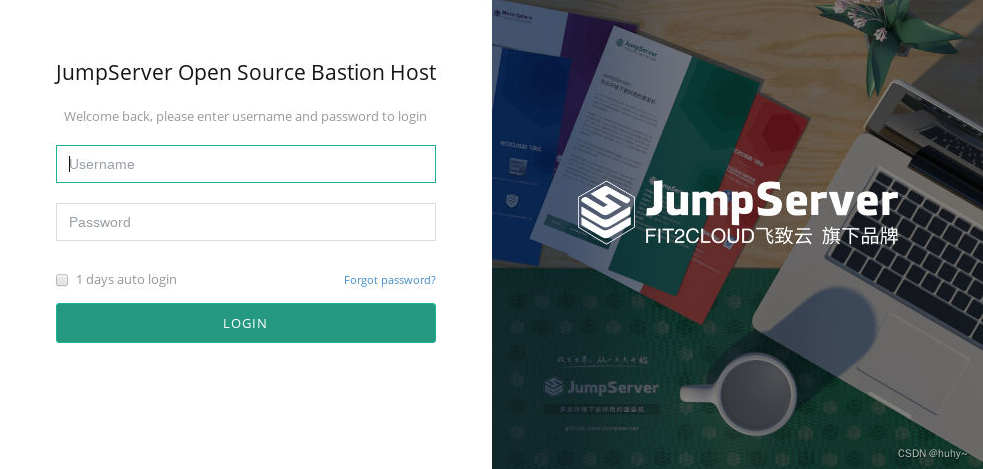

浏览器访问http://10.24.193.142,Jumpserver Web登录(admin/admin)

图1 Web登录

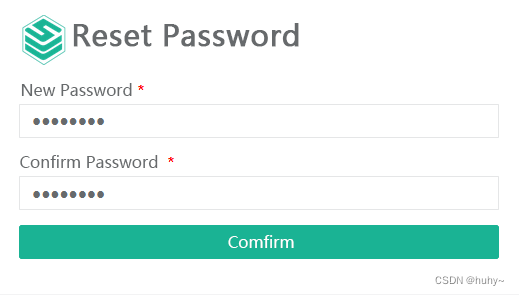

登录成功后,会提示设置新密码,如图2所示:

图2 修改密码

登录平台后,单击页面右上角下拉菜单切换中文字符设置,如图3所示:

图3 登录成功

至此Jumpserver安装完成。

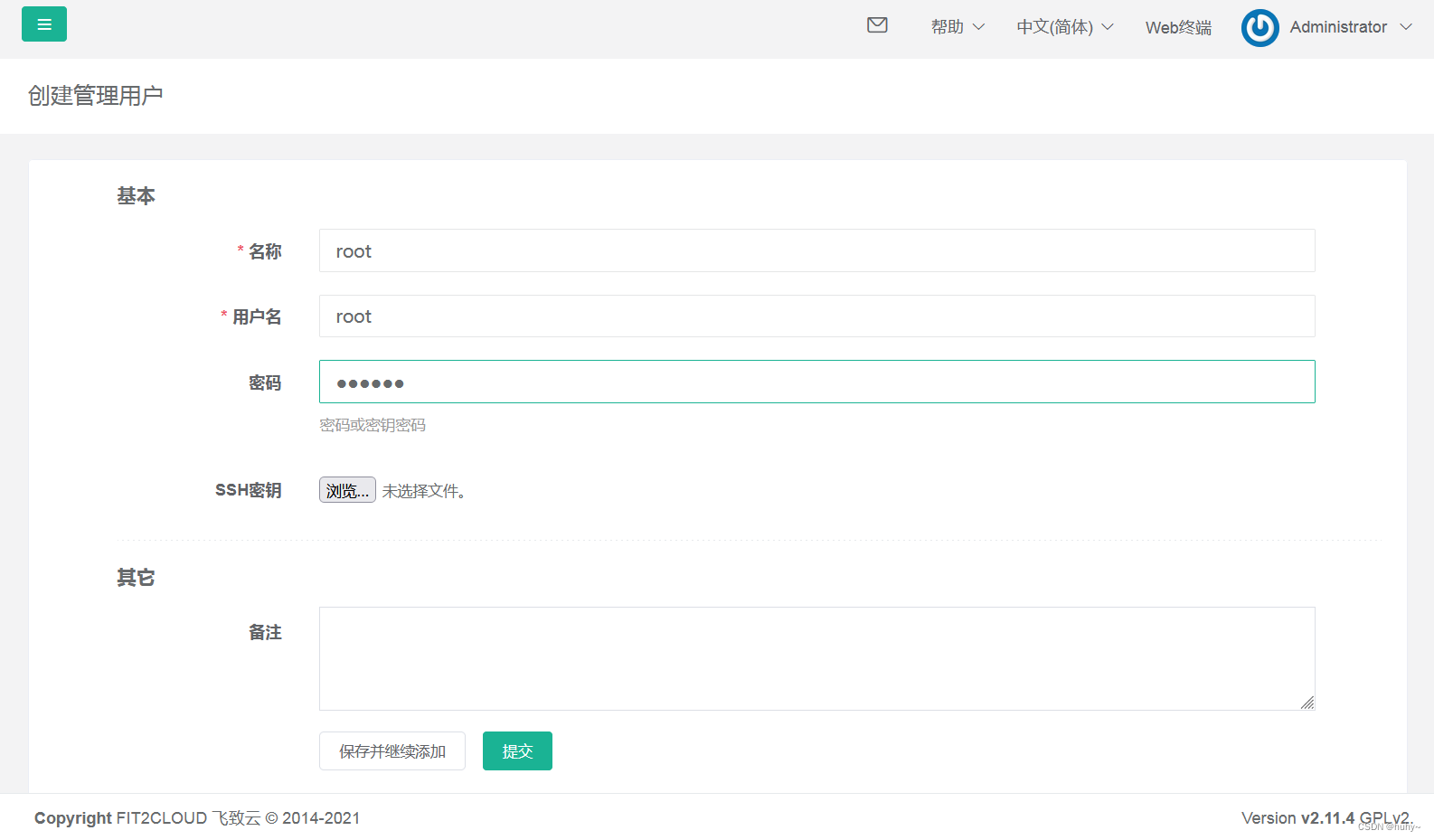

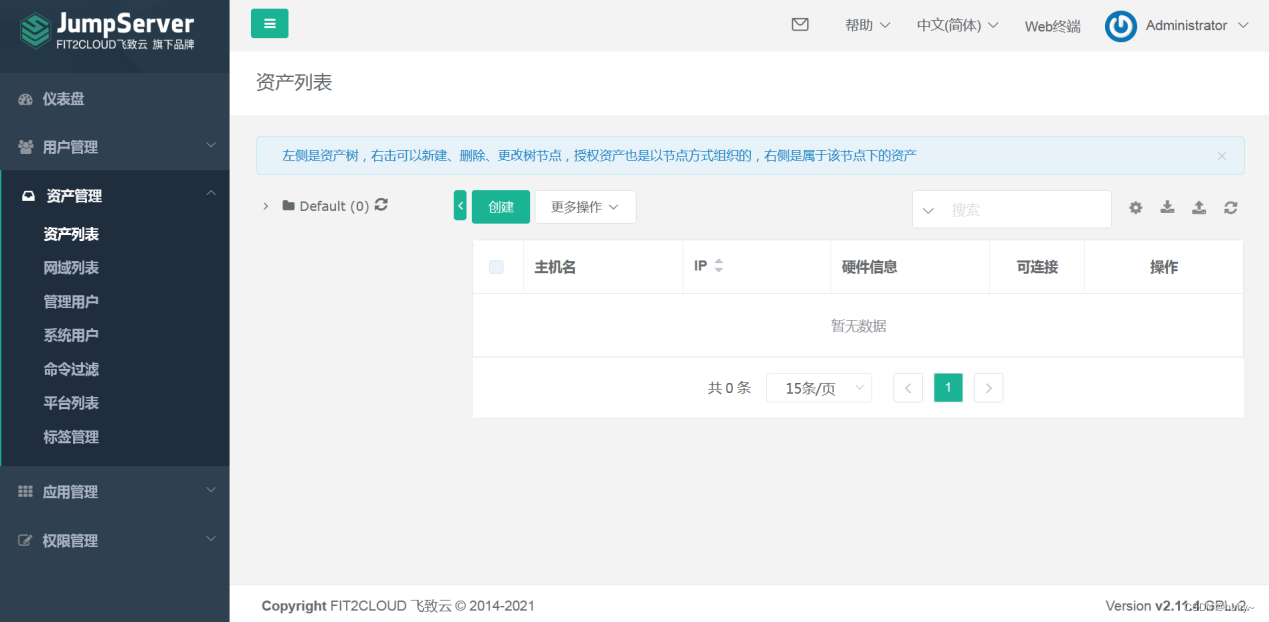

(6)管理资产

使用管理员admin用户登录Jumpserver管理平台,单击左侧导航栏,展开“资产管理”项目,选择“管理用户”,单击右侧“创建”按钮,如图4所示:

图4 管理用户

创建远程连接用户,用户名为root密码为“Abc@1234”,单击“提交”按钮进行创建,如图5所示:

图5 创建管理用户

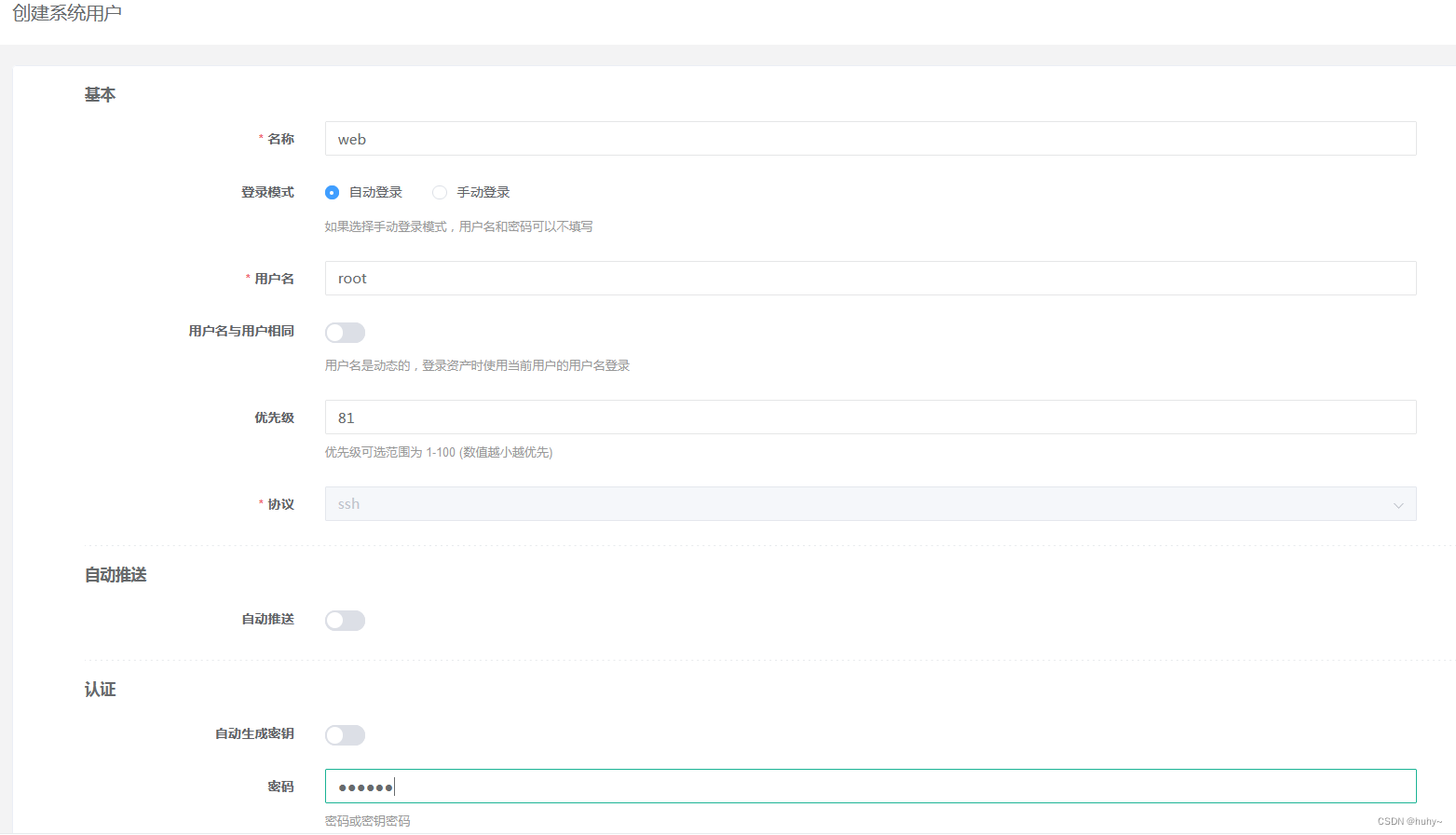

选择“系统用户”,单击右侧“创建”按钮,创建系统用户,选择主机协议“SSH”,设置用户名root,密码为服务器SSH密码并单击“提交”按钮,如图6所示:

图6 创建系统用户

单击左侧导航栏,展开“资产管理”项目,选择“资产列表”,单击右侧“创建”按钮,如图7所示:

图7 管理资产

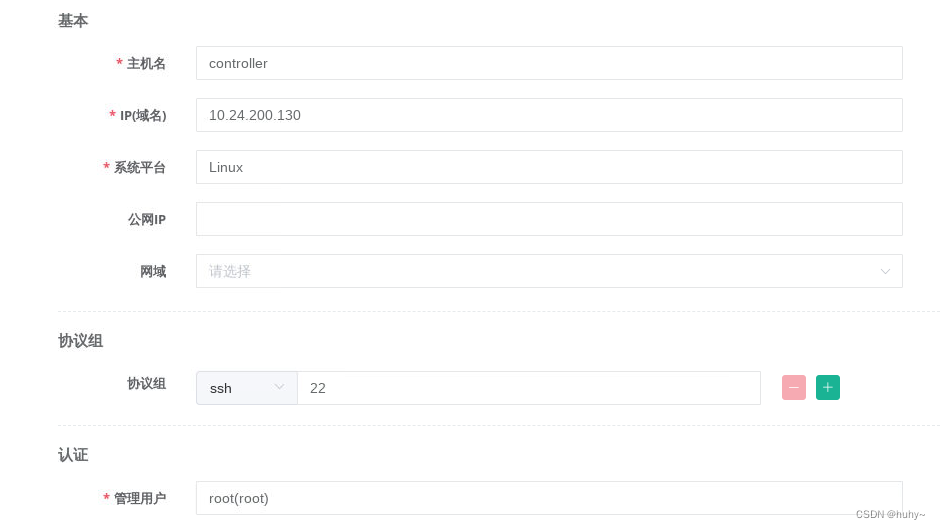

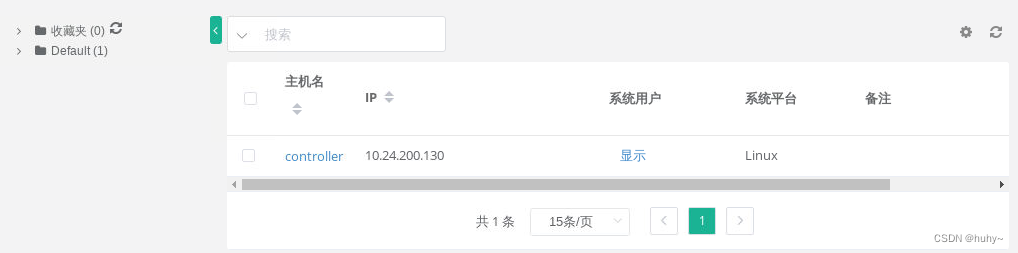

创建资产,将云平台主机(controller)加入资产内,如图8、图9所示:

图8 创建资产controller

图9 创建成功

(7)资产授权

单击左侧导航栏,展开“权限管理”项目,选择“资产授权”,单击右侧“创建”按钮,创建资产授权规则,如图10所示:

图10 创建资产授权规则

(8)测试连接

单击右上角管理员用户下拉菜单,选择“用户界面”,如图11所示:

图11 创建资产授权规则

如果未出现Default项目下的资产主机,单击收藏夹后“刷新”按钮进行刷新,如图12所示:

图12 查看资产

单击左侧导航栏,选择“Web终端”进入远程连接页面,如图13所示:

图13 进入远程连接终端

单击左侧Default,展开文件夹,单击controller主机,右侧成功连接主机,如图14所示:

图14 测试连接

至此OpenStack对接堡垒机案例操作成功。

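1.2.10 Complete tuning or troubleshooting work on the private cloud platform. (Only the exam scope is published for this task, not the actual question.)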

Task 3: Private Cloud Operations Development (10 points)

1.3.1 Write a one-click Shell deployment script

Write a one-click deployment script that deploys the gpmall shop application system.

    #!/bin/bash
    # Set the initial hostname
    hostnamectl set-hostname mall
    ip=`ip a | sed -nr "s/^ .*inet ([0-9].+)\/24 .*/\1/p"`
    cat >> /etc/hosts <<EOF
    127.0.0.1 mysql.mall
    127.0.0.1 redis.mall
    127.0.0.1 zk1.mall
    127.0.0.1 kafka.mall
    127.0.0.1 zookeeper.mall
    EOF
    # Configure yum
    mkdir /etc/yum.repos.d/repo
    mv /etc/yum.repos.d/CentOS* /etc/yum.repos.d/repo
    cat >> /etc/yum.repos.d/local.repo <<EOF
    [centos]
    name=centos
    baseurl=file:///opt/centos
    enabled=1
    gpgcheck=0
    [gpmall-repo]
    name=gpmall
    baseurl=file:///root/gpmall_allinone/gpmall-repo
    enabled=1
    gpgcheck=0
    EOF
    mkdir /opt/centos
    mount /dev/cdrom /opt/centos
    # Install the environment
    yum install -y java-1.8.0-openjdk*
    yum install -y redis
    yum install -y nginx
    yum install -y mariadb-server
    tar -zxvf zookeeper-3.4.14.tar.gz
    tar -zxvf kafka_2.11-1.1.1.tgz
    # Start ZooKeeper (the script path is relative to the extracted directory)
    mv zookeeper-3.4.14/conf/zoo_sample.cfg zookeeper-3.4.14/conf/zoo.cfg
    zookeeper-3.4.14/bin/zkServer.sh start
    echo $?
    # Start Kafka
    kafka_2.11-1.1.1/bin/kafka-server-start.sh -daemon kafka_2.11-1.1.1/config/server.properties
    echo $?
    # Configure MariaDB (the service must be running before the password is set)
    systemctl enable --now mariadb
    mysqladmin -uroot password 123456
    mysql -uroot -p123456 -e 'create database gpmall;'
    mysql -uroot -p123456 -e 'use gpmall;source gpmall/gpmall.sql;'
    # Configure Redis
    sed -i 's/bind 127.0.0.1/#bind 127.0.0.1/' /etc/redis.conf
    sed -i 's/protected-mode yes/protected-mode no/' /etc/redis.conf
    systemctl enable --now redis
    # Deploy the front end
    rm -rf /usr/share/nginx/html/*
    cp -rvf gpmall/dist/* /usr/share/nginx/html/
    rm -rf /etc/nginx/conf.d/default.conf
    cp -rvf gpmall/default.conf /etc/nginx/conf.d/default.conf
    systemctl restart nginx
    # Start the jar packages
    cd gpmall
    nohup java -jar shopping-provider-0.0.1-SNAPSHOT.jar &
    sleep 2
    nohup java -jar user-provider-0.0.1-SNAPSHOT.jar &
    sleep 1
    nohup java -jar gpmall-shopping-0.0.1-SNAPSHOT.jar &
    sleep 1
    nohup java -jar gpmall-user-0.0.1-SNAPSHOT.jar &

1.3.2 Deploy an FTP service with Ansible

Write an Ansible script that deploys an FTP service.
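No answer is given for this task. As a starting point only, the sketch below writes a minimal playbook that installs and starts vsftpd using the standard yum and service modules. The choice of vsftpd, the helper name write_ftp_playbook, and the inventory group ftp_servers are assumptions made for illustration; the actual exam may require roles, templates, or a different FTP server.

```shell
#!/bin/sh
# write_ftp_playbook FILE HOST_GROUP
# Hypothetical helper that writes a minimal vsftpd playbook.
write_ftp_playbook() {
    cat > "$1" <<EOF
---
- hosts: $2
  become: true
  tasks:
    - name: Install vsftpd
      yum:
        name: vsftpd
        state: present
    - name: Start vsftpd and enable it at boot
      service:
        name: vsftpd
        state: started
        enabled: true
EOF
}

playbook=$(mktemp)
# ftp_servers is a made-up inventory group name.
write_ftp_playbook "$playbook" ftp_servers
cat "$playbook"
```

The generated file would then be run with ansible-playbook against an inventory that defines the chosen group.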

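1.3.3 Deploy a Kafka service with Ansible

Write a Playbook that deploys ZooKeeper and Kafka.

1.3.4 Write automated operations tools for the OpenStack container cloud platform. (Only the exam scope is published for this task, not the actual question.)

Module 2: Container Cloud (30 points)

The enterprise builds a Kubernetes container cloud cluster and introduces KubeVirt to complete the move from OpenStack to Kubernetes, using Kubernetes to manage every virtualization runtime, including bare metal, VMs, and containers. The R&D team also decides to build a Kubernetes-based CI/CD environment and to implement its DevOps workflow on that platform. The Istio service mesh is introduced to support canary releases of business systems and to govern and optimize the company's microservices, and automated operations programs are developed.

Task 1: Container Cloud Service Setup (5 points)

2.1.1 Deploy the container cloud platform

Use the OpenStack private cloud platform to create two cloud hosts to serve as the master node and the node node of a Kubernetes cluster, then deploy the Kubernetes cluster along with the Istio service mesh, KubeVirt virtualization, and the Harbor image registry.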

Task 2: Container Cloud Service Operations (15 points)

2.2.1 Containerized deployment of Node-Exporter

Write a Dockerfile that builds the exporter image. It must be based on centos, install and configure the Node-Exporter service, and start the service automatically.

    # Upload the installation package to the working directory
    # Write the Dockerfile
    [root@k8s-master-node1 node-export]# cat Dockerfile
    FROM centos:7
    WORKDIR /opt
    COPY node_exporter-1.3.1.linux-amd64.tar.gz /opt
    RUN tar -zxvf node_exporter-1.3.1.linux-amd64.tar.gz -C /opt
    EXPOSE 9100
    CMD ["/opt/node_exporter-1.3.1.linux-amd64/node_exporter"]

2.2.2 Containerized deployment of Alertmanager

Write a Dockerfile that builds the alert image. It must be based on centos:latest, install and configure the Alertmanager service, and start the service automatically.

    # Upload the installation package to the working directory
    # Write the Dockerfile
    [root@k8s-master-node1 alertmanager]# cat Dockerfile
    FROM centos:7
    WORKDIR /opt
    COPY alertmanager-0.21.0.linux-amd64.tar.gz /opt
    RUN tar -zxvf alertmanager-0.21.0.linux-amd64.tar.gz -C /opt
    EXPOSE 9093
    CMD ["/opt/alertmanager-0.21.0.linux-amd64/alertmanager","--config.file=/opt/alertmanager-0.21.0.linux-amd64/alertmanager.yml"]

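2.2.3 Containerized deployment of Grafana

Write a Dockerfile that builds the grafana image. It must be based on centos, install and configure the Grafana service, and start the service automatically.

    # Upload the installation package to the working directory (not needed when online; this lab was online)
    [root@k8s-master-node1 grafana]# cat Dockerfile
    FROM centos:7
    WORKDIR /opt
    COPY grafana-8.1.2-1.x86_64.rpm /opt
    # Install the copied RPM together with its font dependencies
    RUN yum install -y fontconfig urw-fonts /opt/grafana-8.1.2-1.x86_64.rpm
    EXPOSE 3000
    # Each flag and its value must be a single array element
    CMD ["grafana-server","--config=/etc/grafana/grafana.ini","--homepath=/usr/share/grafana"]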

2.2.4 Containerized deployment of Prometheus

Write a Dockerfile that builds the prometheus image. It must be based on centos, install and configure the Prometheus service, and start the service automatically.

    # Upload the installation package to the working directory
    [root@k8s-master-node1 prometheus]# ls
    Dockerfile prometheus-2.37.0.linux-amd64.tar.gz prometheus.yml
    # Write the Dockerfile
    [root@k8s-master-node1 prometheus]# cat Dockerfile
    FROM centos:7
    WORKDIR /opt
    COPY prometheus-2.37.0.linux-amd64.tar.gz /opt
    RUN tar zxvf prometheus-2.37.0.linux-amd64.tar.gz -C /opt
    COPY prometheus.yml /opt/prometheus-2.37.0.linux-amd64/prometheus.yml
    EXPOSE 9090
    # Point Prometheus at the copied config; the working directory is /opt, not the extracted directory
    CMD ["/opt/prometheus-2.37.0.linux-amd64/prometheus","--config.file=/opt/prometheus-2.37.0.linux-amd64/prometheus.yml"]

2.2.5 Orchestrate the monitoring system

Write a docker-compose.yaml file that uses the exporter, alert, grafana, and prometheus images to orchestrate and deploy the monitoring system.

    [root@k8s-master-node1 monitor]# cat docker-compose.yml
    version: "3"
    services:
      prometheus:
        image: prometheus:v1
        ports:
          - 9090:9090
      node_export:
        image: node_exporter:v1
        ports:
          - 9100:9100
        depends_on:
          - prometheus
      grafana:
        image: grafana:v1
        ports:
          - 3000:3000
        depends_on:
          - prometheus
      alertmanager:
        image: 7e39952d313b
        ports:
          - 9093:9093    # Alertmanager listens on 9093, as exposed in its Dockerfile
        depends_on:
          - prometheus

2.2.6 Install Jenkins

Deploy Jenkins in the default namespace. Install the plugins offline and configure Jenkins's login information and authorization strategy.

    # Create the ServiceAccount and grant it a role
    [root@k8s-master-node1 jenkins]# cat rbac.yml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: jenkins
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: jenkins
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - events
      verbs:
      - get
      - list
      - watch
      - create
      - update
      - delete
    - apiGroups:
      - ""
      resources:
      - pods/exec
      verbs:
      - get
      - list
      - watch
      - create
      - update
      - delete
    - apiGroups:
      - ""
      resources:
      - pods/log
      verbs:
      - get
      - list
      - watch
      - create
      - update
      - delete
    - apiGroups:
      - ""
      resources:        # each resource must be its own list item
      - secrets
      - events
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: jenkins
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: jenkins
    subjects:
    - kind: ServiceAccount
      name: jenkins
      namespace: default

    # Write the Deployment file
    [root@k8s-master-node1 jenkins]# cat jenkins-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: jenkins
      name: jenkins
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jenkins
      template:
        metadata:
          labels:
            app: jenkins
        spec:
          serviceAccountName: jenkins
          containers:
          - image: jenkins/jenkins
            name: jenkins
            ports:
            - containerPort: 8080
            - containerPort: 50000
            env:
            - name: JAVA_OPTS
              value: -Djava.util.logging.config.file=/var/jenkins_home/log.properties
            volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
          volumes:
          - name: jenkins-home
            hostPath:
              path: /var/jenkins_home   # remember to set this directory's permissions to 777

    # Create the Service and expose the ports
    [root@k8s-master-node1 jenkins]# cat jenkins-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: jenkins
      name: jenkins
    spec:
      ports:
      - port: 80
        name: http
        protocol: TCP
        targetPort: 8080
      - port: 50000          # matches the agent containerPort 50000
        name: agent
        protocol: TCP
      selector:
        app: jenkins
      type: NodePort

If plugins fail to download, edit /etc/resolv.conf and then restart Jenkins.

2.2.7 Install GitLab

Deploy GitLab in the default namespace. Set the root user's password, create a public project, and upload the provided code to that project.

    # Deployment file
    [root@k8s-master-node1 gitlab]# cat gitlab-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: gitlab
      name: gitlab
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: gitlab
      template:
        metadata:
          labels:
            app: gitlab
        spec:
          nodeName: k8s-master-node1
          containers:
          - image: gitlab/gitlab-ce
            name: gitlab
            ports:
            - containerPort: 80
            - containerPort: 22
            - containerPort: 443
            volumeMounts:
            - name: gitlab-config
              mountPath: /etc/gitlab
            - name: gitlab-logs
              mountPath: /var/log/gitlab
            - name: gitlab-data
              mountPath: /var/opt/gitlab
          volumes:
          - name: gitlab-config
            hostPath:
              path: /data/gitlab/config
          - name: gitlab-logs
            hostPath:
              path: /data/gitlab/logs
          - name: gitlab-data
            hostPath:
              path: /data/gitlab/data

    # Service file that exposes the port
    [root@k8s-master-node1 gitlab]# cat gitlab-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: gitlab-svc
    spec:
      ports:
      - port: 80
        targetPort: 80
        nodePort: 30001
      selector:
        app: gitlab
      type: NodePort

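2.2.8 Configure Jenkins to connect to GitLab

Create a pipeline job in Jenkins, configure the connection between GitLab and Jenkins, and complete the WebHook configuration.

2.2.9 Build CI/CD

Write a pipeline script in the pipeline job and trigger a build when it is finished. Based on the project in GitLab, the pipeline must automatically compile the code, build and push the image, and publish the service to the Kubernetes cluster.

2.2.10 Service mesh: create an Ingress Gateway

Deploy the Bookinfo application in the default namespace, and create a gateway for it so that it can be reached from outside the cluster.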

    # Enable Istio sidecar injection
    kubectl label namespace default istio-injection=enabled
    # Deploy Bookinfo
    [root@k8s-master-node1 istio-1.13.4]# cat samples/bookinfo/platform/kube/bookinfo.yaml
    # Copyright Istio Authors
    #
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #
    # This file defines the services, service accounts, and deployments for the Bookinfo sample.
    #
    # kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l account=reviews
    # kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l app=reviews,version=v3
    #
    #
    # Details service
    #
    apiVersion: v1
    kind: Service
    metadata:
      name: details
      labels:
        app: details
        service: details
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: details
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-details
      labels:
        account: details
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: details-v1
      labels:
        app: details
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: details
          version: v1
      template:
        metadata:
          labels:
            app: details
            version: v1
        spec:
          serviceAccountName: bookinfo-details
          containers:
          - name: details
            image: docker.io/istio/examples-bookinfo-details-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            securityContext:
              runAsUser: 1000
    ---
    #
    # Ratings service
    #
    apiVersion: v1
    kind: Service
    metadata:
      name: ratings
      labels:
        app: ratings
        service: ratings
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: ratings
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-ratings
      labels:
        account: ratings
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ratings-v1
      labels:
        app: ratings
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ratings
          version: v1
      template:
        metadata:
          labels:
            app: ratings
            version: v1
        spec:
          serviceAccountName: bookinfo-ratings
          containers:
          - name: ratings
            image: docker.io/istio/examples-bookinfo-ratings-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            securityContext:
              runAsUser: 1000
    ---
    #
    # Reviews service
    #
    apiVersion: v1
    kind: Service
    metadata:
      name: reviews
      labels:
        app: reviews
        service: reviews
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: reviews
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-reviews
      labels:
        account: reviews
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v1
      labels:
        app: reviews
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v1
      template:
        metadata:
          labels:
            app: reviews
            version: v1
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v1:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v2
      labels:
        app: reviews
        version: v2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v2
      template:
        metadata:
          labels:
            app: reviews
            version: v2
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v2:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v3
      labels:
        app: reviews
        version: v3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v3
      template:
        metadata:
          labels:
            app: reviews
            version: v3
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v3:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    #
    # Productpage services
    #
    apiVersion: v1
    kind: Service
    metadata:
      name: productpage
      labels:
        app: productpage
        service: productpage
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: productpage
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-productpage
      labels:
        account: productpage
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: productpage-v1
      labels:
        app: productpage
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: productpage
          version: v1
      template:
        metadata:
          labels:
            app: productpage
            version: v1
        spec:
          serviceAccountName: bookinfo-productpage
          containers:
          - name: productpage
            image: docker.io/istio/examples-bookinfo-productpage-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            securityContext:
              runAsUser: 1000
          volumes:
          - name: tmp
            emptyDir: {}
    ---

    # Deploy the gateway
    [root@k8s-master-node1 networking]# cat bookinfo-gateway.yaml
    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: bookinfo-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: bookinfo
    spec:
      hosts:
      - "*"
      gateways:
      - bookinfo-gateway
      http:
      - match:
        - uri:
            exact: /productpage
        - uri:
            prefix: /static
        - uri:
            exact: /login
        - uri:
            exact: /logout
        - uri:
            prefix: /api/v1/products
        route:
        - destination:
            host: productpage
            port:
              number: 9080

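2.2.11 KubeVirt operations: create a VM

Using the provided image, create a VM named exam in the default namespace, specifying its memory, CPU, network interface, and disk configuration.

When the cluster nodes run as VMware virtual machines, hardware virtualization must be enabled for those VMs in VMware.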

# Run this command once the KubeVirt service has finished starting
[root@master ~]# kubectl -n kubevirt wait kv kubevirt --for condition=Available
kubevirt.kubevirt.io/kubevirt condition met

[root@k8s-master-node1 vm]# cat virtual.yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: exam
spec:
  running: false
  template:
    metadata:
      labels:
        kubevirt.io/size: small
        kubevirt.io/domain: exam
    spec:
      domain:
        devices:
          disks:
            - name: containerdisk
              disk:
                bus: virtio
            - name: cloudinitdisk
              disk:
                bus: virtio
          interfaces:
            - name: default
              masquerade: {}
        resources:
          requests:
            memory: 64M
      networks:
        - name: default
          pod: {}
      volumes:
        - name: containerdisk
          containerDisk:
            image: quay.io/kubevirt/cirros-container-disk-demo
        - name: cloudinitdisk
          cloudInitNoCloud:
            userDataBase64: SGkuXG4=

[root@master kubevirt]# kubectl apply -f virtual.yaml
virtualmachine.kubevirt.io/exam created
[root@master kubevirt]# kubectl get vm
NAME   AGE   VOLUME
exam   21m
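
The task asks for memory, CPU, network interface, and disk settings, while the manifest above sets no CPU value. The following is a minimal Python sketch that generates an equivalent manifest with those values as parameters. The function name build_vm_manifest and its default values are illustrative assumptions, and spec.template.spec.domain.cpu.cores is used here as the KubeVirt field for the vCPU count; check the field names against the KubeVirt version actually installed.

```python
import json


def build_vm_manifest(name, memory="64M", cpu_cores=1,
                      image="quay.io/kubevirt/cirros-container-disk-demo"):
    """Return a KubeVirt VirtualMachine manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": "default"},
        "spec": {
            "running": False,  # the VM is started later with `virtctl start`
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cpu_cores},
                        "devices": {
                            "disks": [
                                {"name": "containerdisk", "disk": {"bus": "virtio"}},
                            ],
                            "interfaces": [{"name": "default", "masquerade": {}}],
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    "networks": [{"name": "default", "pod": {}}],
                    "volumes": [
                        {"name": "containerdisk", "containerDisk": {"image": image}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, so the output can be piped to it.
    print(json.dumps(build_vm_manifest("exam", memory="128M", cpu_cores=2), indent=2))
```

If the script is saved as, say, vm.py (a hypothetical file name), its output could be applied with `python3 vm.py | kubectl apply -f -`.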

Usage

# Start the instance
[root@master kubevirt]# virtctl start exam
VM exam was scheduled to start
[root@master kubevirt]# kubectl get vmi
NAME   AGE   PHASE     IP            NODENAME
exam   62s   Running   10.244.0.15   master

# Log in to the virtual machine
[root@master kubevirt]# virtctl console exam
Successfully connected to vm-cirros console. The escape sequence is ^]

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.
vm-cirros login: cirros
Password:
$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast qlen 1000
    link/ether 2e:3e:2a:46:29:94 brd ff:ff:ff:ff:ff:ff
    inet 10.244.0.16/24 brd 10.244.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::2c3e:2aff:fe46:2994/64 scope link tentative flags 08
       valid_lft forever preferred_lft forever
$

# Start and stop commands
# virtctl start vm
# virtctl stop vm

# Expose the VM as a service
# A VirtualMachine can be exposed as a service. The service only becomes usable once the VirtualMachineInstance has started.
[root@master kubevirt]# virtctl expose virtualmachine exam --name vmiservice-node --target-port 22 --port 24 --type NodePort
Service vmiservice-node successfully exposed for virtualmachine vm-cirros
[root@master kubevirt]# kubectl get svc
NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes        ClusterIP   10.96.0.1       <none>        443/TCP        95d
vmiservice-node   NodePort    10.106.62.191   <none>        24:31912/TCP   3s

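2.2.12 Complete tuning or troubleshooting work on the container cloud platform. (Only the exam scope is published for this task; the actual questions are not released.)

Task 3: Container cloud operations development (10 points)

2.3.1 Manage the Job service

Kubernetes Python operations script development: manage the Job service using the SDK.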
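
Since the actual exam question is not given, the following is only a sketch of what an SDK-based Job script might look like, assuming the official kubernetes Python client is installed and a kubeconfig is available. The helper names, the Job name, the busybox image, and the command are all illustrative assumptions. The SDK is imported lazily so that the manifest builder can be used and checked without a cluster.

```python
def build_job_manifest(name, image, command, namespace="default"):
    """Build a batch/v1 Job manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "backoffLimit": 2,  # give up after two failed retries
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {"name": name, "image": image, "command": list(command)},
                    ],
                }
            },
        },
    }


def create_job(manifest):
    """Submit the Job through the SDK; needs the kubernetes package and a kubeconfig."""
    from kubernetes import client, config  # imported lazily on purpose

    config.load_kube_config()
    batch = client.BatchV1Api()
    return batch.create_namespaced_job(
        namespace=manifest["metadata"]["namespace"], body=manifest
    )


def delete_job(name, namespace="default"):
    """Delete the Job and let Kubernetes clean up its pods in the background."""
    from kubernetes import client, config

    config.load_kube_config()
    return client.BatchV1Api().delete_namespaced_job(
        name=name, namespace=namespace, propagation_policy="Background"
    )


if __name__ == "__main__":
    job = build_job_manifest("hello-job", "busybox", ["sh", "-c", "echo hello"])
    print(job["metadata"]["name"])
```

Calling create_job(job) on a machine with cluster access would submit the Job; querying it follows the same pattern with read_namespaced_job on the same BatchV1Api object.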

2.3.2 Custom scheduler

Kubernetes Python operations script development: manage the scheduler using the RESTful API.
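
The exam question itself is not published, so this is only one plausible reading of the task: a custom scheduler assigns a pending Pod to a node by POSTing a Binding object to the Pod's binding subresource. The sketch below builds that request with the standard library only. The API server address, token, Pod name, and node name are placeholders, and the Pod is assumed to set spec.schedulerName to a custom value so that the default scheduler leaves it alone.

```python
import json
import urllib.request


def build_binding_request(api_server, token, namespace, pod_name, node_name):
    """Build the REST request that binds a pending Pod to a node (hypothetical helper)."""
    url = f"{api_server}/api/v1/namespaces/{namespace}/pods/{pod_name}/binding"
    body = {
        "apiVersion": "v1",
        "kind": "Binding",
        "metadata": {"name": pod_name},
        "target": {"apiVersion": "v1", "kind": "Node", "name": node_name},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # All values below are placeholders, not taken from the exam environment.
    req = build_binding_request(
        "https://127.0.0.1:6443", "TOKEN", "default", "demo-pod", "k8s-worker-node1"
    )
    print(req.get_method(), req.full_url)
```

The request is only constructed here. Sending it would be done with urllib.request.urlopen(req, context=...), using an ssl context that trusts the cluster CA.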

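2.3.3 Write an automated operations tool for the Kubernetes container cloud platform. (Only the exam scope is published for this task; the actual questions are not released.)

Module 3: Public cloud (40 points)

The enterprise selects a domestic public cloud provider and uses services such as cloud servers, cloud networks, cloud disks, cloud firewalls, and load balancing to create web services, shared file storage, database services, and database clusters. It also builds cloud-native DevOps services, constructs an edge computing system that integrates cloud, edge, and device, and develops cloud applications.

Based on the characteristics of the public cloud platform described above, complete the following public cloud operations tasks.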

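Task 1: Public cloud service setup (5 points)

3.1.1 Private network management

Create a virtual private cloud (VPC) network in the public cloud.

3.1.2 Cloud instance management

Log in to the public cloud platform and create two cloud instance virtual machines.

3.1.3 Database management

Using the intnetX-mysql network, create two cloud servers named chinaskill-sql-1 and chinaskill-sql-2, and install MongoDB on them.

3.1.4 Master-slave database

Configure a MongoDB master-slave database on the chinaskill-sql-1 and chinaskill-sql-2 cloud servers.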
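
The task does not say which MongoDB version is installed. MongoDB's legacy master/slave mode was removed in version 4.0, so on a recent release the usual way to get a primary with a replicating secondary is a replica set. The following sketch generates the rs.initiate() command for the two servers, under that assumption. The replica set name "cloud" and the port are illustrative, and both mongod instances are assumed to have been started with a matching replica set name.

```python
import json


def build_replset_config(name, hosts, port=27017):
    """Build a replica set config document; the first host gets the higher priority."""
    members = [
        {"_id": index, "host": f"{host}:{port}", "priority": 2 if index == 0 else 1}
        for index, host in enumerate(hosts)
    ]
    return {"_id": name, "members": members}


def initiate_command(config):
    """Render the mongo shell command that initiates the replica set."""
    return f"rs.initiate({json.dumps(config)})"


if __name__ == "__main__":
    cfg = build_replset_config("cloud", ["chinaskill-sql-1", "chinaskill-sql-2"])
    print(initiate_command(cfg))
```

The printed command would be pasted into the mongo shell on chinaskill-sql-1. Giving that host the higher priority makes it the preferred primary, which mirrors the master role in the task wording.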

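3.1.5 Node environment management

Using the provided archive, install the Node.js environment.

3.1.6 Security group management

Create a security group as required.

3.1.7 Move Rocket.Chat to the cloud

Using the files provided by the HTTP server, deploy the Rocket.Chat application to the cloud.

3.1.8 NAT gateway

Create a public NAT gateway as required.

3.1.9 Cloud server backup

Create a cloud server backup vault named server_backup with a capacity of 100 GB. Create an image file named chinaskill-image from the ChinaSkill-node-1 cloud server.

3.1.10 Load balancer

Create a load balancer named chinaskill-elb as required.

3.1.11 Auto scaling management

Create a new auto scaling launch configuration as required.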

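Task 2: Public cloud service operations (10 points)

3.2.1 Cloud container engine

On the public cloud, create an x86-architecture container cloud cluster as required.

3.2.2 Cloud container management

Use plugin management to install the Dashboard visual monitoring interface in the kcloud container cluster.

3.2.3 Operate the cluster with kubectl

Install the kubectl command in the kcloud cluster and use it to manage the kcloud cluster.

3.2.4 Install Helm

Using the provided Helm package, install the Helm service in the kcloud cluster.

3.2.5 Deploy the mariadb service from the provided chart package mariadb-7.3.14.tgz, and modify mariadb so that it is accessed in NodePort mode.

3.2.6 Create the mariadb namespace in the k8s cluster, modify the configuration of the provided chart package mariadb-7.3.14.tgz, and access the service in NodePort mode.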
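
As a rough illustration of tasks 3.2.5 and 3.2.6, the sketch below assembles a Helm 3 install command that overrides the service type instead of editing the chart's files. The release name, the helper name, and above all the values key service.type are assumptions; the keys the chart really exposes can be listed with `helm show values mariadb-7.3.14.tgz` before running anything.

```python
import shlex


def helm_install_args(release, chart, namespace, service_type="NodePort"):
    """Build the argv for a Helm 3 install that overrides the service type."""
    return [
        "helm", "install", release, chart,
        "--namespace", namespace,
        # Assumed values key; verify it against the chart's values.yaml.
        "--set", f"service.type={service_type}",
    ]


def as_shell(args):
    """Render an argv list as a copy-pasteable shell command line."""
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    print(as_shell(helm_install_args("mariadb", "mariadb-7.3.14.tgz", "mariadb")))
```

The namespace is expected to exist already, as task 3.2.6 creates it first. Once installed, `kubectl get svc -n mariadb` would show the node port that was assigned.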

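Task 3: Public cloud operations development (10 points)

3.3.1 Development environment setup

Create a cloud server, log in to it, and install the Python 3.6.8 runtime environment and the SDK dependency libraries.

3.3.2 Security group management

Call the security group API to create, delete, query, and update security groups.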
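
The provider and its API are not named in the task, so the following is only a generic sketch of how the four operations map onto HTTP requests. The endpoint, the /security-groups path, the X-Auth-Token header, and the security_group request body are assumptions modeled on the OpenStack Networking API; the real provider's API reference takes precedence for all of them.

```python
import json
import urllib.request

# CRUD action -> HTTP method
_METHODS = {"create": "POST", "list": "GET", "show": "GET",
            "update": "PUT", "delete": "DELETE"}


def build_sg_request(endpoint, token, action, group_id=None, name=None):
    """Build (but do not send) a security group API request (hypothetical helper)."""
    if action not in _METHODS:
        raise ValueError(f"unknown action: {action}")
    url = f"{endpoint}/security-groups"
    if action in ("show", "update", "delete"):
        if not group_id:
            raise ValueError(f"{action} requires group_id")
        url = f"{url}/{group_id}"
    data = None
    headers = {"X-Auth-Token": token}
    if action in ("create", "update"):
        data = json.dumps({"security_group": {"name": name}}).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, method=_METHODS[action], headers=headers)


if __name__ == "__main__":
    # Placeholder endpoint and token, for illustration only.
    req = build_sg_request("https://vpc.example.com/v2.0", "TOKEN", "create", name="web-sg")
    print(req.get_method(), req.full_url)
```

Sending a request would be done with urllib.request.urlopen(req), and the JSON response parsed with json.load. Keeping construction separate from sending makes the request shape easy to check before touching a real account.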

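3.3.3 Security group rule management

Call the SDK's security group rule methods to create, delete, query, and update security group rules.

3.3.4 Cloud server management

Call the SDK's cloud server management methods to create, delete, query, and update cloud servers.

3.3.5 Develop an automated operations program for the public cloud platform. (Only the exam scope is published for this task; the actual questions are not released.)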

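Task 4: Edge computing system operations (10 points)

3.4.1 Cloud-side deployment

Build a Kubernetes container cloud platform, deploy the KubeEdge CloudCore cloud-side module on the cloud side, and start the cloudcore service.

3.4.2 Edge-side deployment

Deploy the KubeEdge EdgeCore edge-side module on the edge side, and start the edgecore service.

3.4.3 Edge application deployment

Deploy and debug the application scenario images through the edge computing platform. (Only the exam scope is published for this task; the actual questions are not released.)

Task 5: Edge computing cloud application development (5 points)

3.5.1 Integrate with the edge computing system and complete cloud application microservice development. (Only the exam scope is published for this task; the actual questions are not released.)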